10. TLS Proxy with NGINX

10.1. Introduction

A TLS proxy is an intermediate entity or application that sits between a server and a client, where at least one of them uses TLS for communication. Depending on the type of proxy, it may encrypt or decrypt the traffic between the server and client applications. There are different types of TLS proxies:

TLS termination/reverse proxy: Deployed on the server side. The proxy terminates the TLS connection from the client and sends the decrypted payload to the backend non-TLS application server. It is used to add security, load balancing, and caching capabilities to the backend server.

TLS forwarding proxy: A forward proxy is commonly deployed on the client side, and clients are configured to route their traffic through it. The proxy decrypts the TLS connection between the client and itself, and establishes a separate TLS connection with the server for communication between the proxy and the server.

Intercepting proxy: Also known as a man-in-the-middle proxy, it intercepts the traffic between the client and the server. It inspects TLS traffic by establishing one secure connection with the client and another with the destination server. This type of proxy is typically deployed at network gateways where TLS traffic inspection is required.

The remainder of this document concentrates mainly on the TLS termination proxy.

10.2. NGINX Brief

NGINX is an HTTP and reverse proxy server, a mail proxy server, and a generic TCP/UDP proxy server. It is widely used as both a web server and a proxy server on the internet. The rest of this document describes how to configure and use NGINX as a TLS termination proxy.

10.3. NGINX as TLS termination proxy

10.3.1. Usage/deployment Model

This section describes the steps to deploy NGINX as a TLS termination proxy.

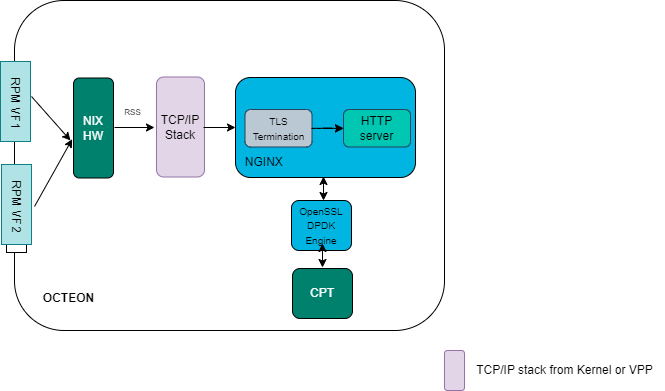

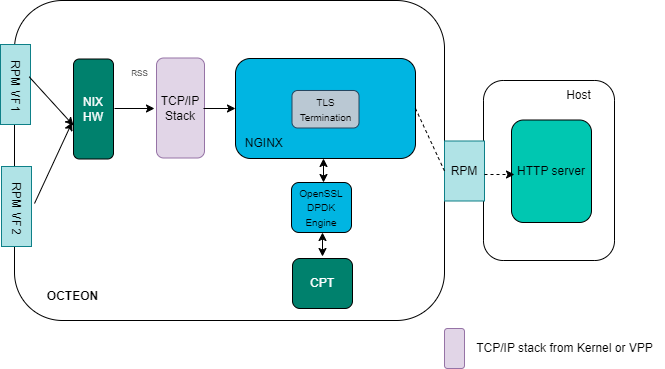

10.3.2. NGINX Architecture Diagram

NGINX as TLS termination proxy with inbuilt web server

NGINX as TLS termination proxy with separate backend web/application server

10.3.3. NGINX acceleration on OCTEON

The NGINX application provided in this solution is modified to add the following features:

Changes required to leverage asynchronous mode in OpenSSL

Changes required to leverage the engine framework in OpenSSL for crypto acceleration

Changes required to use the DPDK engine via the OpenSSL engine framework

Changes required to leverage pipelining support in OpenSSL

The modified NGINX performs better because it offloads crypto operations, such as RSA and ECDSA sign/verify, to CPT through the DPDK engine interface. With asynchronous mode, NGINX does not waste CPU cycles waiting while crypto operations are offloaded to CPT; the worker can service other connections until the results are ready. The pipelining feature lets NGINX use the pipeline support exposed by OpenSSL to submit crypto operations to CPT in bursts. This amortizes the cost of submitting crypto operations to CPT over a burst of packets, and allows multiple operations to be submitted together when the user data is split into multiple TLS records.
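A simple count shows how pipelining changes the number of CPT submissions. The sketch below is a toy model and does not follow the NGINX or OpenSSL code path. It makes two assumptions:

- Each TLS record carries at most the protocol maximum of 16 KiB of plaintext.
- One burst submission covers up to ssl_max_pipelines records. The example NGINX configurations in this document set this to 8.

The function names are hypothetical.

```python
import math

# Largest plaintext a single TLS record may carry: 2**14 bytes (16 KiB).
MAX_RECORD_PLAINTEXT = 16 * 1024


def record_count(payload_len: int, record_size: int = MAX_RECORD_PLAINTEXT) -> int:
    """Number of TLS records needed to carry payload_len bytes."""
    return math.ceil(payload_len / record_size)


def submissions(payload_len: int, max_pipelines: int = 1) -> int:
    """Toy model: crypto-device submissions needed when up to
    max_pipelines records are encrypted per burst submission."""
    return math.ceil(record_count(payload_len) / max_pipelines)


if __name__ == "__main__":
    size = 4 * 1024 * 1024  # a 4 MiB response body, such as 4MB.html
    print("TLS records:", record_count(size))                          # 256
    print("submissions, no pipelining:", submissions(size, 1))        # 256
    print("submissions, ssl_max_pipelines 8:", submissions(size, 8))  # 32
```

Real record sizes also depend on OpenSSL settings such as split_send_fragment. Treat the result as an illustration of the ratio, not as a performance prediction.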

10.4. Steps to Install

There are two methods of installation: Installing the Ubuntu Debian package or Compiling from Sources. The following sections describe both methods.

10.4.1. Ubuntu Debian package

Before downloading the NGINX package, make sure the Ubuntu repository is set up properly, as described in Setting up Ubuntu repo for DAO.

10.4.1.1. Installing the NGINX package

Release version

~# apt-get install openssl-engine-1.0.0-cn10k

~# apt-get install nginx-1.22.0-cn10k

Note

Installing openssl-engine-1.0.0-cn10k will automatically bring in several essential DAO components as dependencies. These include:

cpt-firmware-cn10k

dpdk-25.11-cn10k

openssl-1.1.1q-cn10k

After installing openssl-1.1.1q-cn10k, set LD_LIBRARY_PATH as shown below so that the libraries of the installed OpenSSL version are loaded:

~# export LD_LIBRARY_PATH=/usr/lib/cn10k/openssl-1.1.1q/lib:$LD_LIBRARY_PATH

10.4.2. Compiling from Sources

Note

Follow the steps to compile from sources.

10.5. Environment Setup

10.5.1. Run the script below (once after every reboot) to create CPT VFs and bind them to vfio-pci:

10.5.1.1. For CN9K board:

sh /usr/share/openssl-engine-dpdk/openssl-engine-dpdk-otx2.sh /bin/dpdk-devbind.py

10.5.1.2. For CN10K board:

sh /usr/share/openssl-engine-dpdk/openssl-engine-dpdk-cn10k.sh /bin/dpdk-devbind.py

Note

For proper OpenSSL acceleration with NGINX, CPT virtual functions (VFs) must be bound to the vfio-pci driver, and the number of VFs must equal the system’s logical CPU count. The OpenSSL engine maps each VF to a CPU core by its CPU ID, and NGINX worker processes can run on any core. The provided setup scripts create and bind one CPT VF per logical CPU (commonly 24), so each worker core has access to a dedicated VF. If manual control over the mapping of worker cores to VFs is needed, configure it with the cptvf_queues parameter in the openssl.cnf file.
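To confirm that the setup script created one VF per logical CPU, compare the logical CPU count with the standard Linux sriov_numvfs sysfs attribute of the CPT physical function (PF). The sketch below assumes you already know the CPT PF's PCI address, for example from lspci. The script name check_cpt_vfs.py and its function names are hypothetical.

```python
import os
import sys
from pathlib import Path


def configured_vfs(pf_bdf: str, sysfs_root: str = "/sys/bus/pci/devices") -> int:
    """Read how many SR-IOV VFs are currently enabled on a PCI physical function."""
    return int((Path(sysfs_root) / pf_bdf / "sriov_numvfs").read_text().strip())


def vfs_match_cpus(pf_bdf: str, sysfs_root: str = "/sys/bus/pci/devices") -> bool:
    """True when there is exactly one VF per logical CPU."""
    return configured_vfs(pf_bdf, sysfs_root) == os.cpu_count()


if __name__ == "__main__":
    if len(sys.argv) == 2:
        pf = sys.argv[1]  # CPT PF PCI address (assumed known, e.g. from lspci)
        print(f"{pf}: {configured_vfs(pf)} VFs, {os.cpu_count()} logical CPUs")
        sys.exit(0 if vfs_match_cpus(pf) else 1)
    print(f"usage: {sys.argv[0]} <cpt-pf-pci-address>", file=sys.stderr)
```

For example, `python3 check_cpt_vfs.py <cpt-pf-pci-address>` exits with status 0 only when the two counts match.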

10.5.2. NGINX configuration

~# cat <path-to-conffile>/<nginx-config-file>.conf

10.5.2.1. NGINX as HTTPS Server

The following example, async_nginx.conf, runs NGINX as an HTTPS server using its built-in web server:

user root root;

daemon off;

worker_processes 1;

error_log logs/error.log;

#error_log logs/error.log notice;

#error_log logs/error.log info;

load_module modules/ngx_ssl_engine_cpt_module.so;

ssl_engine {

use_engine dpdk_engine;

default_algorithms ALL;

cpt_engine {

cpt_poll_mode heuristic;

cpt_offload_mode async;

#cpt_notify_mode poll;

#cpt_heuristic_poll_asym_threshold 24;

}

}

events {

use epoll;

worker_connections 1024;

multi_accept on;

accept_mutex off;

}

http {

keepalive_timeout 300s;

connection_pool_size 1024;

keepalive_requests 1000000;

access_log off;

server {

listen 443 ssl default_server;

ssl_certificate /etc/nginx/certs/server.crt.pem;

ssl_certificate_key /etc/nginx/certs/server.key.pem;

ssl_client_certificate /etc/nginx/certs/rootca.crt.pem;

ssl_asynch on;

ssl_max_pipelines 8;

root /var/www/html;

index index.html index.htm index.nginx-debian.html;

server_name _;

location / {

try_files $uri $uri/ =404;

}

}

# Port 443 - SSL

#include /etc/nginx/sites-enabled/*;

# Port 80 - TCP

#include /etc/nginx/sites-available/*;

}
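To check the HTTPS server from a client other than ab, you can use Python's standard ssl and http.client modules. The sketch below uses the same protocol version and cipher as the ab commands in this document (TLS 1.2, AES128-GCM-SHA256). It disables certificate verification, assuming the server uses a self-signed test certificate. The script name tls_check.py and the helper names are hypothetical.

```python
import http.client
import ssl
import sys
from typing import Optional, Tuple


def make_test_context() -> ssl.SSLContext:
    """TLS 1.2 client context restricted to AES128-GCM-SHA256, like the ab tests."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    ctx.maximum_version = ssl.TLSVersion.TLSv1_2
    ctx.set_ciphers("AES128-GCM-SHA256")
    # ab does not verify the server certificate; do the same for a test certificate.
    # check_hostname must be disabled before verify_mode can be set to CERT_NONE.
    ctx.check_hostname = False
    ctx.verify_mode = ssl.CERT_NONE
    return ctx


def fetch(host: str, path: str, port: int = 443) -> Tuple[int, Optional[str], int]:
    """GET path over HTTPS; return (status, negotiated cipher, body length)."""
    conn = http.client.HTTPSConnection(host, port, context=make_test_context(), timeout=10)
    try:
        conn.request("GET", path)
        # Read the cipher now: the socket may be detached once the response is read.
        cipher = conn.sock.cipher()[0] if conn.sock else None
        resp = conn.getresponse()
        body = resp.read()
        return resp.status, cipher, len(body)
    finally:
        conn.close()


if __name__ == "__main__" and len(sys.argv) == 3:
    # e.g. python3 tls_check.py <nginx-dut-ip> /test/4MB.html
    print(fetch(sys.argv[1], sys.argv[2]))
```

The script prints the HTTP status, the negotiated cipher name and the number of body bytes received.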

10.5.2.2. NGINX as TLS Forwarder

The following example, tls-proxy-forwarding-async_nginx.conf, configures NGINX as an HTTPS forwarding proxy. Both the connection from the client to NGINX and the connection from NGINX to the backend server are secured with TLS:

user root root;

daemon off;

worker_processes 1;

error_log logs/error.log;

load_module modules/ngx_ssl_engine_cpt_module.so;

ssl_engine {

use_engine dpdk_engine;

default_algorithms ALL;

cpt_engine {

cpt_poll_mode heuristic;

cpt_offload_mode async;

}

}

events {

use epoll;

worker_connections 65536;

multi_accept on;

accept_mutex off;

}

http {

keepalive_timeout 300s;

connection_pool_size 1024;

keepalive_requests 1000000;

access_log off;

server {

listen 443 ssl default_server;

ssl_certificate /etc/nginx/certs/server.crt.pem;

ssl_certificate_key /etc/nginx/certs/server.key.pem;

ssl_client_certificate /etc/nginx/certs/rootca.crt.pem;

ssl_asynch on;

ssl_max_pipelines 8;

root /var/www/html;

index index.html index.htm index.nginx-debian.html;

server_name _;

location / {

# where 2.0.0.2:443 is the backend HTTPS server:port

proxy_pass https://2.0.0.2/;

}

}

}

10.5.2.3. NGINX as TLS Initiator

The following example, backend-https-async_nginx.conf, configures NGINX to accept plain HTTP connections from clients on port 8000. NGINX then acts as a TLS client toward the backend HTTPS server:

user root root;

daemon off;

worker_processes 1;

error_log logs/error.log;

load_module modules/ngx_ssl_engine_cpt_module.so;

ssl_engine {

use_engine dpdk_engine;

default_algorithms ALL;

cpt_engine {

cpt_poll_mode heuristic;

cpt_offload_mode async;

}

}

events {

use epoll;

worker_connections 65536;

multi_accept on;

accept_mutex off;

}

http {

keepalive_timeout 300s;

connection_pool_size 1024;

keepalive_requests 1000000;

access_log off;

server {

# clients connect to NGINX on port 8000 over plain HTTP

listen 8000;

ssl_certificate /etc/nginx/certs/server.crt.pem;

ssl_certificate_key /etc/nginx/certs/server.key.pem;

ssl_max_pipelines 8;

root /var/www/html;

index index.html index.htm index.nginx-debian.html;

server_name _;

location / {

# where 2.0.0.2:443 is the backend HTTPS server:port

proxy_pass https://2.0.0.2/;

}

}

}

10.5.3. OpenSSL configuration

~# cat /opt/openssl.cnf

10.5.3.1. OpenSSL example conf

#

# OpenSSL dpdk_engine Configuration File

#

# This definition stops the following lines choking if HOME isn't

# defined.

HOME = .

openssl_conf = openssl_init

[ openssl_init ]

engines = engine_section

[ eal_params_section ]

eal_params_common = "E_DPDKCPT --socket-mem=500 -d librte_mempool_ring.so"

eal_params_cptpf_dbdf = "0002:20:00.1"

[ engine_section ]

dpdk_engine = dpdkcpt_engine_section

[ dpdkcpt_engine_section ]

dynamic_path = /opt5/openssl-engine-dpdk/dpdk_engine.so

eal_params = $eal_params_section::eal_params_common

# Append process id to dpdk file prefix, turn on to avoid sharing hugepages/VF with other processes

# If setting to no, manually add --file-prefix <name> to eal_params

eal_pid_in_fileprefix = yes

# Append -l <sched_getcpu()> to eal_params

# If setting to no, manually add -l <lcore list> to eal_params

eal_core_by_cpu = yes

# Whitelist CPT VF device

# Choose CPT VF automatically based on core number

# replaces dd.f (device and function) in below PCI ID based on sched_getcpu

eal_cptvf_by_cpu = $eal_params_section::eal_params_cptpf_dbdf

cptvf_queues = {{0, 0}}

engine_alg_support = ALL

# Crypto device to use

# Use openssl dpdk crypto PMD

# crypto_driver = "crypto_openssl"

# Use crypto_cn10k crypto PMD on cn10k

#crypto_driver = "crypto_cn10k"

# Use crypto_cn9k crypto PMD on cn9k

crypto_driver = "crypto_cn9k"

engine_log_level = ENG_LOG_INFO

init=0

10.6. Launching the application

10.6.1. Running the Proxy Application

~# OPENSSL_CONF_MULTI=<path-to-conffile>/openssl.cnf <path-to-nginx-bin>/sbin/nginx -c <path-to-conffile>/async_nginx.conf

NOTE: The path to the NGINX application is /usr/local/nginx/, that is, <path-to-nginx-bin> is /usr/local/nginx.
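You can also script the launch, for example from a test harness. The sketch below makes three assumptions:

- OpenSSL libraries are at the Debian-package path from the installation section.
- NGINX uses the /usr/local/nginx prefix.
- openssl.cnf and the NGINX configuration file are in the same directory.

The names build_launch and launch_nginx.py are hypothetical.

```python
import os
import subprocess
import sys

# Library path used after installing the openssl-1.1.1q-cn10k package.
OPENSSL_LIB_DIR = "/usr/lib/cn10k/openssl-1.1.1q/lib"


def build_launch(conf_dir: str, nginx_prefix: str = "/usr/local/nginx",
                 nginx_conf: str = "async_nginx.conf"):
    """Return (argv, env) for starting NGINX with the CPT-enabled openssl.cnf."""
    env = dict(os.environ)
    env["OPENSSL_CONF_MULTI"] = os.path.join(conf_dir, "openssl.cnf")
    # Put the cn10k OpenSSL libraries first, keeping any existing search path.
    old = env.get("LD_LIBRARY_PATH")
    env["LD_LIBRARY_PATH"] = OPENSSL_LIB_DIR + (":" + old if old else "")
    argv = [os.path.join(nginx_prefix, "sbin", "nginx"),
            "-c", os.path.join(conf_dir, nginx_conf)]
    return argv, env


if __name__ == "__main__" and len(sys.argv) == 2:
    argv, env = build_launch(sys.argv[1])
    # "daemon off;" in the example configuration keeps NGINX in the foreground.
    sys.exit(subprocess.call(argv, env=env))
```

Usage: `python3 launch_nginx.py <path-to-conffile>`. Adjust OPENSSL_LIB_DIR if OpenSSL was built from sources.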

10.6.2. Functional Testing of the Proxy

~# ab -i -c1 -n1 -f TLS1.2 -Z AES128-GCM-SHA256 https://<nginx-dut-ip>/test/<FILE_SIZE>.html

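NOTE: The file <FILE_SIZE>.html (e.g., 4MB.html) must exist in the test/ subdirectory of the directory NGINX serves files from, as set by the root directive. With the example HTTPS server configuration, that path is /var/www/html/test/4MB.html.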
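A short script can generate test files of a given size. The sketch below writes <root>/test/<FILE_SIZE>.html, where <root> is the directory NGINX serves the /test/ URL from (the root directive, /var/www/html, in the example configurations). It assumes binary units, so 4MB means 4 * 1024 * 1024 bytes. The script name make_test_file.py and its function names are hypothetical.

```python
import re
import sys
from pathlib import Path

# Binary units are an assumption: "4MB" is taken to mean 4 * 1024 * 1024 bytes.
UNITS = {"B": 1, "KB": 1024, "MB": 1024 ** 2, "GB": 1024 ** 3}


def parse_size(label: str) -> int:
    """Convert a label such as '4MB' or '512KB' to a byte count."""
    m = re.fullmatch(r"(\d+)(B|KB|MB|GB)", label.upper())
    if not m:
        raise ValueError(f"unrecognized size label: {label!r}")
    return int(m.group(1)) * UNITS[m.group(2)]


def create_test_file(doc_root: str, label: str) -> Path:
    """Write <doc_root>/test/<label>.html containing parse_size(label) filler bytes."""
    target = Path(doc_root) / "test" / f"{label}.html"
    target.parent.mkdir(parents=True, exist_ok=True)
    remaining = parse_size(label)
    chunk = b"a" * 65536
    with open(target, "wb") as f:
        while remaining > 0:
            n = min(remaining, len(chunk))
            f.write(chunk[:n])
            remaining -= n
    return target


if __name__ == "__main__" and len(sys.argv) == 3:
    # e.g. python3 make_test_file.py /var/www/html 4MB
    print(create_test_file(sys.argv[1], sys.argv[2]))
```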

10.6.3. Performance Testing of the Proxy

10.6.3.1. Kernel Parameters Tuning for best performance

Set the following kernel parameters before load testing NGINX, so that the Linux kernel TCP stack performs optimally.

~# sysctl net.core.rmem_max=33554432

~# sysctl net.core.wmem_max=33554432

~# sysctl net.ipv4.tcp_rmem="4096 87380 33554432"

~# sysctl net.ipv4.tcp_wmem="4096 65536 33554432"

~# sysctl net.ipv4.tcp_window_scaling=1

~# sysctl net.ipv4.tcp_timestamps=1

~# sysctl net.ipv4.tcp_sack=1

~# ifconfig enP2p5s0 txqueuelen 5000


~# echo 30 > /proc/sys/net/ipv4/tcp_keepalive_intvl

~# echo 5 > /proc/sys/net/ipv4/tcp_keepalive_probes

~# echo 1 > /proc/sys/net/ipv4/tcp_tw_recycle   # only on kernels older than 4.12, where this parameter still exists

~# echo 1 > /proc/sys/net/ipv4/tcp_tw_reuse

~# sysctl net.ipv4.tcp_no_metrics_save=1

~# sysctl net.core.netdev_max_backlog=30000

~# sysctl net.ipv4.tcp_congestion_control=cubic

~# echo 5 > /proc/sys/net/ipv4/tcp_fin_timeout
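Some of these parameters differ between kernel versions. For instance, net.ipv4.tcp_tw_recycle no longer exists on Linux 4.12 and later. It can be convenient to apply the settings from a script that skips keys the running kernel does not expose. The sketch below writes the values through /proc/sys, using the standard mapping of dotted sysctl names to paths. It covers only a subset of the commands above and omits the ifconfig txqueuelen step. The helper name apply is hypothetical.

```python
import sys
from pathlib import Path

# A subset of the values set by the commands above.
SETTINGS = {
    "net.core.rmem_max": "33554432",
    "net.core.wmem_max": "33554432",
    "net.ipv4.tcp_rmem": "4096 87380 33554432",
    "net.ipv4.tcp_wmem": "4096 65536 33554432",
    "net.ipv4.tcp_keepalive_intvl": "30",
    "net.ipv4.tcp_keepalive_probes": "5",
    "net.ipv4.tcp_tw_recycle": "1",  # absent on Linux 4.12 and later
    "net.ipv4.tcp_tw_reuse": "1",
    "net.ipv4.tcp_no_metrics_save": "1",
    "net.core.netdev_max_backlog": "30000",
    "net.ipv4.tcp_congestion_control": "cubic",
    "net.ipv4.tcp_fin_timeout": "5",  # final value in the list above
}


def apply(settings: dict, proc_root: str = "/proc/sys", dry_run: bool = False) -> list:
    """Write each value to <proc_root>/<key with dots replaced by slashes>.

    Keys that the running kernel does not expose are skipped and returned."""
    skipped = []
    for key, value in settings.items():
        path = Path(proc_root, *key.split("."))
        if not path.exists():
            skipped.append(key)
        elif not dry_run:
            path.write_text(value + "\n")
    return skipped


if __name__ == "__main__":
    # Writes only with --apply (which requires root); otherwise just reports missing keys.
    missing = apply(SETTINGS, dry_run="--apply" not in sys.argv)
    print("skipped (not present on this kernel):", missing or "none")
```

Run it once without arguments to list the keys that would be skipped. Then run it as root with --apply to write the values.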

10.6.3.2. Performance measurement using ab client

The Apache HTTP server benchmarking tool (ab) can be used to benchmark NGINX:

~# ab -i -c64 -n10000 -f TLS1.2 -Z AES128-GCM-SHA256 https://<nginx-dut-ip>/test/<FILE_SIZE>.html
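When sweeping file sizes or concurrency levels, it helps to collect ab's headline numbers programmatically. The sketch below runs the command above and extracts four fields by matching the labels in ab's plain-text report: Complete requests, Failed requests, Requests per second and Transfer rate. The regular expressions assume ab's usual report layout. The helper name parse_ab is hypothetical.

```python
import re
import subprocess
import sys

# Labels as they appear in ab's plain-text report.
PATTERNS = {
    "complete_requests": r"^Complete requests:\s+(\d+)",
    "failed_requests": r"^Failed requests:\s+(\d+)",
    "requests_per_sec": r"^Requests per second:\s+([\d.]+)",
    "transfer_kbytes_per_sec": r"^Transfer rate:\s+([\d.]+)",
}


def parse_ab(report: str) -> dict:
    """Extract headline numbers from an ab report; fields not found are omitted."""
    result = {}
    for name, pattern in PATTERNS.items():
        m = re.search(pattern, report, re.MULTILINE)
        if m:
            result[name] = float(m.group(1))
    return result


if __name__ == "__main__" and len(sys.argv) == 2:
    url = sys.argv[1]  # https://<nginx-dut-ip>/test/<FILE_SIZE>.html
    cmd = ["ab", "-i", "-c64", "-n10000", "-f", "TLS1.2", "-Z", "AES128-GCM-SHA256", url]
    report = subprocess.run(cmd, capture_output=True, text=True, check=True).stdout
    print(parse_ab(report))
```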

10.6.3.3. Performance measurement using h2load client

~# h2load -n 10000 -c 64 --cipher=AES128-GCM-SHA256,2048,256 https://<nginx-dut-ip>/test/<FILE_SIZE>.html
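h2load prints a short summary at the end of each run. The sketch below extracts the elapsed time, request rate and request counts from the "finished in ..." and "requests: ..." summary lines. The patterns assume the usual h2load summary format. The helper name parse_h2load is hypothetical.

```python
import re

# Summary lines printed by h2load at the end of a run.
FINISHED = re.compile(r"finished in ([\d.]+)(us|ms|s), ([\d.]+) req/s")
REQUESTS = re.compile(
    r"requests: (\d+) total, \d+ started, (\d+) done, (\d+) succeeded, (\d+) failed")
SCALE = {"us": 1e-6, "ms": 1e-3, "s": 1.0}


def parse_h2load(report: str) -> dict:
    """Extract elapsed time, request rate, and request counts from h2load output."""
    result = {}
    m = FINISHED.search(report)
    if m:
        result["elapsed_s"] = float(m.group(1)) * SCALE[m.group(2)]
        result["req_per_s"] = float(m.group(3))
    m = REQUESTS.search(report)
    if m:
        total, done, ok, failed = map(int, m.groups())
        result.update(total=total, done=done, succeeded=ok, failed=failed)
    return result
```

For example, run the h2load command above with subprocess.run(..., capture_output=True, text=True) and pass its stdout to parse_h2load.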

10.6.3.4. Application running demo

Note

For OpenSSL engine usage with NGINX, set the init parameter in the openssl.cnf file to 0:

init = 0

For other applications, such as openssl speed, s_server and s_client, set the init parameter in the openssl.cnf file to 1:

init = 1
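The same openssl.cnf is easy to reuse between NGINX and the OpenSSL command-line tools. A small pre-flight check can catch a wrong init value or a crypto_driver that does not match the board. The sketch below parses the file with Python's configparser after normalizing OpenSSL's "[ section ]" headers. It assumes the engine section is named dpdkcpt_engine_section, as in the example openssl.cnf. It also assumes the board-to-driver mapping follows the crypto_driver comments in that file. The names check and load_engine_section are hypothetical helpers, not part of the engine.

```python
import configparser
import re

# Expected crypto PMD per board, following the crypto_driver comments in the example file.
DRIVER_FOR_BOARD = {"cn9k": "crypto_cn9k", "cn10k": "crypto_cn10k"}


def load_engine_section(text: str, section: str = "dpdkcpt_engine_section") -> dict:
    """Parse openssl.cnf text and return the engine section as a dict."""
    # OpenSSL allows "[ name ]"; configparser would keep the spaces, so strip them.
    text = re.sub(r"^[ \t]*\[[ \t]*(.*?)[ \t]*\]", r"[\1]", text, flags=re.MULTILINE)
    parser = configparser.ConfigParser(interpolation=None, strict=False, delimiters=("=",))
    # Keys such as HOME and openssl_conf sit before any section; give them a header.
    parser.read_string("[__top__]\n" + text)
    return dict(parser[section])


def check(text: str, app: str, board: str) -> list:
    """List problems for running `app` ("nginx" or e.g. "s_server") on `board`."""
    engine = load_engine_section(text)
    problems = []
    want_init = "0" if app == "nginx" else "1"
    if engine.get("init", "").strip() != want_init:
        problems.append(f"init should be {want_init} for {app}")
    driver = engine.get("crypto_driver", "").strip().strip('"')
    if driver != DRIVER_FOR_BOARD[board]:
        problems.append(f"crypto_driver is {driver!r}, expected {DRIVER_FOR_BOARD[board]!r}")
    return problems
```

For example, check(Path("/opt/openssl.cnf").read_text(), "nginx", "cn10k") returns an empty list when the file is ready for NGINX on CN10K.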