15. VirtIO Block Library

The VirtIO Block library uses the VirtIO library, a component of DAO, to provide VirtIO block functionality to supported applications. It offers APIs for block I/O applications running on OCTEON and allows OCTEON block devices to be provisioned as VirtIO block devices to the host or to VMs running on the host.

15.1. Features

VirtIO block emulation currently supports the VirtIO 1.3 specification and offers the following features:

15.1.1. VirtIO Common Feature Bits

VIRTIO_F_RING_PACKED

VIRTIO_F_ANY_LAYOUT

VIRTIO_F_IN_ORDER

VIRTIO_F_ORDER_PLATFORM

VIRTIO_F_IOMMU_PLATFORM

VIRTIO_F_NOTIFICATION_DATA

15.1.2. VirtIO Block Feature Bits

The VirtIO block specification defines the following feature bits. The set that is actually offered depends on the type of the underlying block device:

VIRTIO_BLK_F_SIZE_MAX

VIRTIO_BLK_F_SEG_MAX

VIRTIO_BLK_F_BLK_SIZE

VIRTIO_BLK_F_FLUSH

VIRTIO_BLK_F_DISCARD

VIRTIO_BLK_F_TOPOLOGY

VIRTIO_BLK_F_WRITE_ZEROES

VIRTIO_BLK_F_GEOMETRY

VIRTIO_BLK_F_RO

VIRTIO_BLK_F_CONFIG_WCE

VIRTIO_BLK_F_MQ

VIRTIO_BLK_F_LIFETIME

VIRTIO_BLK_F_SECURE_ERASE

VIRTIO_BLK_F_ZONED

15.1.3. Notes on Supported Features

Only Packed virtqueues (VIRTIO_F_RING_PACKED) are supported.

Modern devices are supported; legacy devices are not supported.

The implementation requires extra data (besides the virtqueue identifier) in device notifications (VIRTIO_F_NOTIFICATION_DATA). The host or guest driver must enable this feature.

VIRTIO_F_ORDER_PLATFORM is mandatory for proper functioning of the smart NIC, as it ensures memory ordering between the host and the OCTEON DPU.

The current implementation of VirtIO block supports only in-order processing of requests.

Zoned block devices are not supported.
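
The notes above can be turned into a small negotiation check. The sketch below is illustrative only: the bit positions are the ones defined by the VirtIO specification, VIRTIO_F_VERSION_1 is used here as the marker for a modern (non-legacy) device, and the helper names `required_features_negotiated()` and `missing_required_features()` are hypothetical, not part of the DAO API.

```c
#include <stdbool.h>
#include <stdint.h>

/* Feature bit positions as defined by the VirtIO specification. */
#define VIRTIO_F_VERSION_1         32
#define VIRTIO_F_RING_PACKED       34
#define VIRTIO_F_ORDER_PLATFORM    36
#define VIRTIO_F_NOTIFICATION_DATA 38

#define FEAT(bit) (1ULL << (bit))

/* Bits that the notes above describe as required (assumed grouping). */
#define REQUIRED_FEATURES                                        \
	(FEAT(VIRTIO_F_VERSION_1) | FEAT(VIRTIO_F_RING_PACKED) | \
	 FEAT(VIRTIO_F_ORDER_PLATFORM) | FEAT(VIRTIO_F_NOTIFICATION_DATA))

/* Hypothetical helper: true when every required bit was negotiated. */
bool required_features_negotiated(uint64_t negotiated)
{
	return (negotiated & REQUIRED_FEATURES) == REQUIRED_FEATURES;
}

/* Hypothetical helper: mask of required bits the driver did not accept. */
uint64_t missing_required_features(uint64_t negotiated)
{
	return REQUIRED_FEATURES & ~negotiated;
}
```

An application could log the mask returned by `missing_required_features()` to explain why a driver that lacks, for example, packed ring support cannot use the device.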

15.2. VirtIO Emulation Architecture Overview

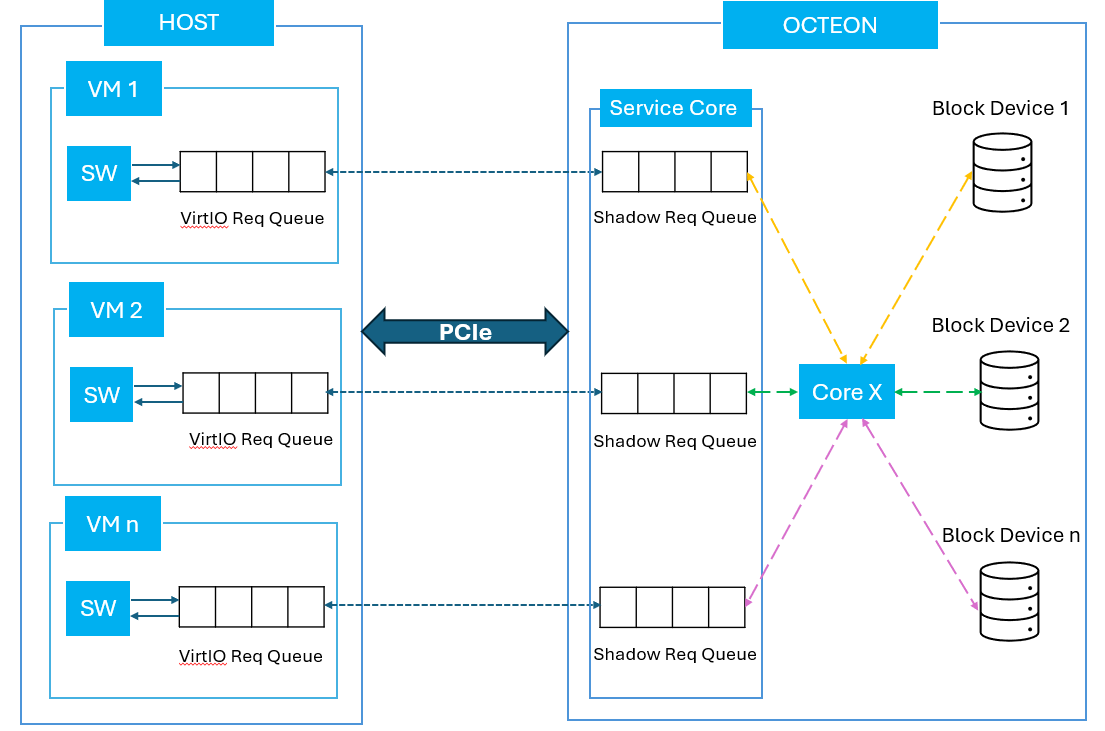

This design provides a scalable architecture in which each emulation software core takes one of two roles: service core or worker core. In the diagram below, Core X refers to a worker core, and each worker core works on its own shadow virtio request queue.

The service core acts as the overseer, managing the virtio queue descriptors exchanged between the host and FW. Its responsibilities include determining the queue depth, tracking head and tail pointers, and marking buffers as available or in-use. Meanwhile, the worker cores leverage the services provided by the service core, effectively facilitating the movement of requests/responses between the host and FW.

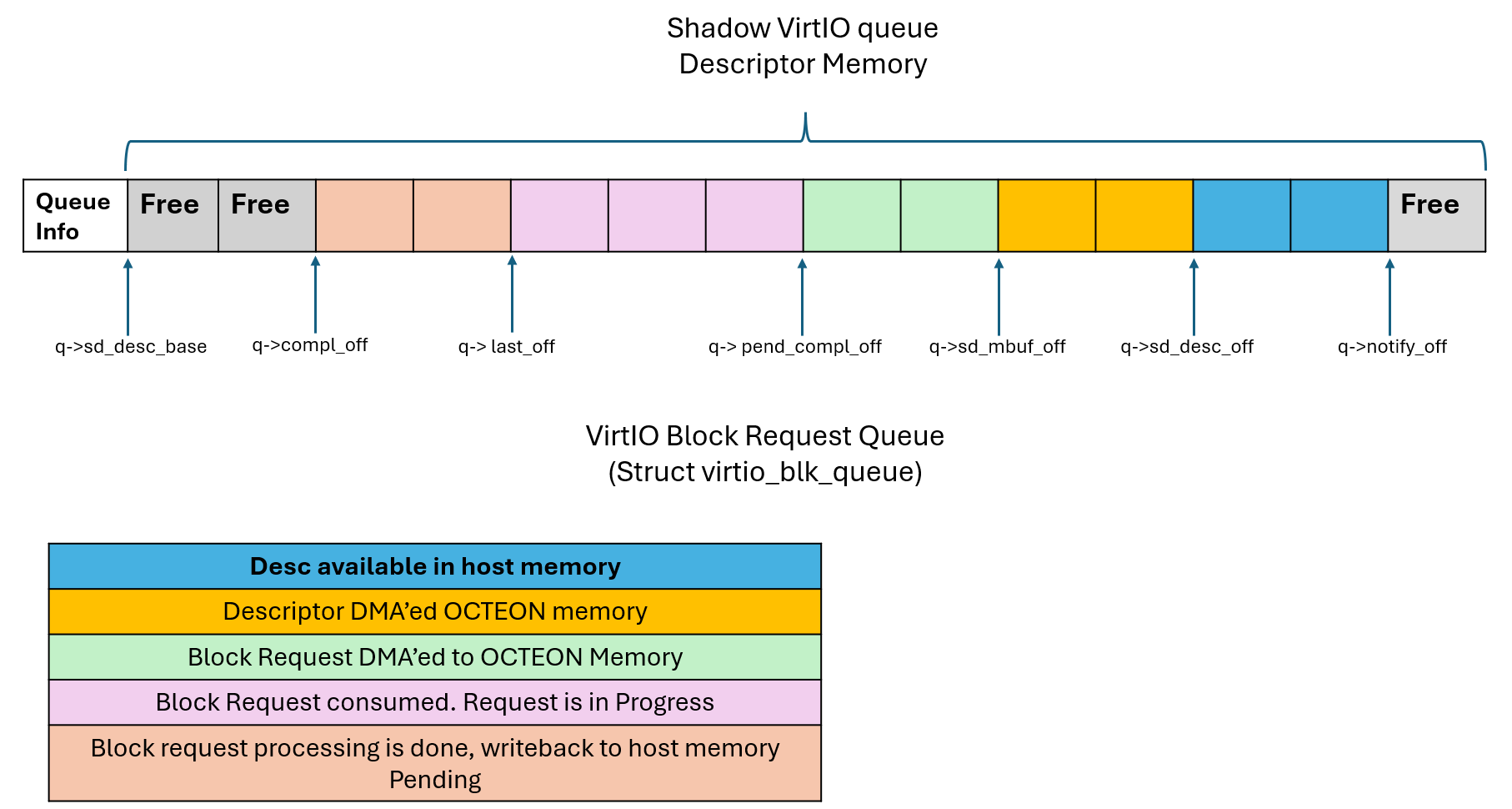

The figure above depicts the internal architecture of the virtio-blk library. Each portion of the descriptor area passes through several processing stages in sequence. Processing starts when a notification data update moves the tail/avail index to the end of the available descriptors. The service core then calls dao_virtio_blk_desc_manage() to initiate a DMA fetch of the descriptor data.
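
To make the notification step more concrete, the sketch below decodes the 32-bit notification data word that the VirtIO specification defines for packed virtqueues when VIRTIO_F_NOTIFICATION_DATA is negotiated (virtqueue number in bits 15:0, next offset in bits 30:16, wrap counter in bit 31). How the DAO library stores or consumes this value internally is not described in this document, so the structure and both helper names are hypothetical.

```c
#include <stdint.h>

/* Decoded form of the notification data word (packed virtqueue layout). */
struct notify_data {
	uint16_t vqn;      /* bits [15:0]: virtqueue number */
	uint16_t next_off; /* bits [30:16]: offset of the next available slot */
	uint8_t next_wrap; /* bit 31: driver wrap counter */
};

struct notify_data notify_data_decode(uint32_t raw)
{
	struct notify_data nd;

	nd.vqn = (uint16_t)(raw & 0xffffu);
	nd.next_off = (uint16_t)((raw >> 16) & 0x7fffu);
	nd.next_wrap = (uint8_t)(raw >> 31);
	return nd;
}

/*
 * Hypothetical helper: number of descriptors made available since the
 * previously seen offset and wrap counter, for a ring of q_size entries.
 * Assumes the driver never posts more than q_size descriptors at once.
 */
uint16_t notify_new_descs(uint16_t prev_off, uint8_t prev_wrap,
			  struct notify_data nd, uint16_t q_size)
{
	if (nd.next_off == prev_off)
		return nd.next_wrap != prev_wrap ? q_size : 0;
	return (uint16_t)((nd.next_off + q_size - prev_off) % q_size);
}
```

The count returned by `notify_new_descs()` corresponds to the span of descriptors that a service core would need to fetch by DMA.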

15.3. VirtIO Block Device Usage

This section covers how to identify, initialize, and use a VirtIO block device.

15.3.1. VirtIO Block Device Identification

Each VirtIO block device is identified in all functions by a unique device index starting from 0. The library currently supports a maximum of 64 VirtIO block devices, each mapped one-to-one to a PEM VF. VirtIO devid[11:0] indicates the VF number, while devid[15:12] indicates the PF number corresponding to that VF. A VirtIO device is always connected to a host VF. The host PF has no VirtIO device representation in the library.
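
The bit layout described above can be expressed with a few shifts and masks. This is a minimal sketch: the macro and function names are hypothetical and are not taken from the DAO headers.

```c
#include <stdint.h>

/* Layout from the text: devid[11:0] = VF number, devid[15:12] = PF number. */
#define DEVID_VF_MASK  0x0fffu
#define DEVID_PF_SHIFT 12
#define DEVID_PF_MASK  0xfu

/* Extract the VF number from a VirtIO device ID. */
uint16_t devid_to_vf(uint16_t devid)
{
	return (uint16_t)(devid & DEVID_VF_MASK);
}

/* Extract the PF number from a VirtIO device ID. */
uint16_t devid_to_pf(uint16_t devid)
{
	return (uint16_t)((devid >> DEVID_PF_SHIFT) & DEVID_PF_MASK);
}

/* Compose a device ID from PF and VF numbers (out-of-range bits are dropped). */
uint16_t devid_make(uint16_t pf, uint16_t vf)
{
	return (uint16_t)(((pf & DEVID_PF_MASK) << DEVID_PF_SHIFT) |
			  (vf & DEVID_VF_MASK));
}
```

For example, VF 5 under PF 2 would be encoded as 0x2005 with this layout.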

15.3.2. VirtIO Block Device Initialization

Initialization of a VirtIO block device typically includes the following operations:

Create and initialize a block device with the block device library based on the type of block device required using the dao_blk_dev_create() API.

Initialize the base VirtIO device using the virtio_dev_init() API, which populates the VirtIO capabilities to be available to the host.

Configure the device with parameters in dao_virtio_blkdev_conf.

The dao_virtio_blkdev_conf structure and the signature of the dao_virtio_blkdev_init() API are shown below:

    struct dao_virtio_blkdev_conf {
        /** PEM device ID */
        uint16_t pem_devid;
        /** Block device capacity in sectors */
        uint64_t capacity;
        /** Block size */
        uint32_t blk_size;
        /** Max segment size */
        uint32_t seg_size_max;
        /** Max segments */
        uint32_t seg_max;
        /** Vchan to use for this virtio dev */
        uint16_t dma_vchan;
    #define DAO_VIRTIO_BLKDEV_EXTBUF DAO_BIT_ULL(0)
        /** Config flags */
        uint16_t flags;
        union {
            struct {
                /** Default dequeue mempool */
                struct rte_mempool *pool;
            };
            /** Valid when DAO_VIRTIO_BLKDEV_EXTBUF is set in flags */
            struct {
                uint16_t dataroom_size;
            };
        };
        /** Max virt_queues */
        uint16_t max_virt_queues;
        /** Feature bits */
        uint64_t feat_bits;
        /** Auto free enabled/disabled */
        bool auto_free_en;
    };

    int dao_virtio_blkdev_init(uint16_t devid, struct dao_virtio_blkdev_conf *conf);
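
As a rough illustration of how the geometry fields relate to each other, the sketch below derives a capacity from a disk size in bytes. It assumes that capacity follows the VirtIO convention of 512-byte sectors (the structure comment only says "sectors"). It uses a trimmed, hypothetical stand-in structure rather than the real dao_virtio_blkdev_conf so that it compiles without the DAO headers, and the segment limits are placeholder values, not recommendations.

```c
#include <stdint.h>

#define SECTOR_SIZE 512u /* capacity unit used by the VirtIO block spec */

/* Hypothetical stand-in for a few dao_virtio_blkdev_conf fields. */
struct blkdev_conf_sketch {
	uint64_t capacity;     /* assumed to be in 512-byte sectors */
	uint32_t blk_size;     /* logical block size in bytes */
	uint32_t seg_size_max; /* max bytes per segment */
	uint32_t seg_max;      /* max segments per request */
	uint16_t max_virt_queues;
};

/* Returns 0 on success, -1 when the requested geometry is inconsistent. */
int blkdev_conf_fill(struct blkdev_conf_sketch *conf, uint64_t disk_bytes,
		     uint32_t blk_size, uint16_t nb_queues)
{
	/* The block size must be a non-zero multiple of the sector size. */
	if (blk_size < SECTOR_SIZE || blk_size % SECTOR_SIZE != 0)
		return -1;
	/* The disk must hold a whole number of blocks. */
	if (disk_bytes == 0 || disk_bytes % blk_size != 0)
		return -1;

	conf->capacity = disk_bytes / SECTOR_SIZE;
	conf->blk_size = blk_size;
	conf->seg_size_max = 64 * 1024; /* placeholder value */
	conf->seg_max = 32;             /* placeholder value */
	conf->max_virt_queues = nb_queues;
	return 0;
}
```

In a real application the equivalent values would be written into dao_virtio_blkdev_conf, together with pem_devid, dma_vchan, the mempool, and feat_bits, before calling dao_virtio_blkdev_init().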

15.3.3. VirtIO Queues

The application is expected to read the active virtqueue count using dao_virtio_blkdev_queue_count() and distribute the block request queues equally among all subscribed lcores.
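
One possible way to split the queues evenly is to give each worker a contiguous range, with the remainder spread over the first workers. The sketch below is only an illustration of that arithmetic: `queues_for_worker()` and `struct queue_range` are hypothetical names, and the queue count is passed in as a plain number rather than read from the library.

```c
#include <stdint.h>

/* Contiguous range of queue IDs, [first, first + count), owned by one worker. */
struct queue_range {
	uint16_t first;
	uint16_t count;
};

/*
 * Hypothetical helper: split nb_queues across nb_workers so that no two
 * workers differ by more than one queue. Returns an empty range for an
 * invalid worker index.
 */
struct queue_range queues_for_worker(uint16_t nb_queues, uint16_t nb_workers,
				     uint16_t worker_idx)
{
	struct queue_range r = { 0, 0 };
	uint16_t base, extra;

	if (nb_workers == 0 || worker_idx >= nb_workers)
		return r;

	base = (uint16_t)(nb_queues / nb_workers);
	extra = (uint16_t)(nb_queues % nb_workers);

	/* The first 'extra' workers each take one additional queue. */
	r.count = (uint16_t)(base + (worker_idx < extra ? 1 : 0));
	r.first = (uint16_t)(worker_idx * base +
			     (worker_idx < extra ? worker_idx : extra));
	return r;
}
```

With 8 active queues and 3 worker lcores, this yields the ranges 0 to 2, 3 to 5, and 6 to 7.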

15.3.4. VirtIO Block Data Path

The data path of a VirtIO block application typically consists of the following steps executed in a continuous loop:

Dequeue a block IO request from the VirtIO queue.

Process the block IO request.

Mark the request as complete.

15.3.5. Request Dequeue

As part of the data path loop, worker cores continuously poll for VirtIO block requests on the input request queue. The typical request dequeue process is shown below:

1. Poll for new block IO requests on the input queue using dao_virtio_blk_dequeue_burst(uint16_t devid, uint16_t qid, void **vbufs, uint16_t nb_bufs):
   - devid and qid are the VirtIO device ID and queue ID on which the core polls for IO requests.
   - The call internally performs DMA of the IO requests and DMA of the data buffers (needed by write requests).
   - The library reads the requests available in the request queue and dequeues at most nb_bufs of them.

2. vbufs points to the raw buffers where request data and metadata are stored for further processing of the IO requests:
   - Metadata (per vbuf) includes virtio_blk_hdr (per IO request) and the segment information needed for each request.
   - Each vbuf in the vbufs array corresponds to a single block IO request.

3. The core then processes the IO request corresponding to each vbuf dequeued in the steps above. Request processing is explained as part of the virtio-blockio application.

15.3.6. Request Completion Marking

Completion marking means that a block IO request submitted by the driver has been completed and its response is ready to be returned. Once request processing is complete, the block IO application calls dao_virtio_blk_process_compl(uint16_t devid, uint16_t qid, void **vbufs, uint16_t nb_compl) with nb_compl set to 1.

As part of this, the following operations are executed:

For read requests, the DMA of data and block IO status from OCTEON to host memory is issued. For other requests, block IO request status is updated from OCTEON to Host memory.

Fetch DMA status and update the shadow mbuf offset, so that the service core can mark the descriptors as used based on the shadow mbuf offset.
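
Putting dequeue, processing, and completion together, one iteration of a worker loop might look like the sketch below. To keep the example buildable without the DAO headers, the library calls are reached through function pointers whose parameter lists mirror the ones quoted in this section. The return types, the `struct blk_io_ops` wrapper, the `handle_request` callback, and the `fake_*` functions are all assumptions made for illustration.

```c
#include <stdint.h>

#define BURST_SZ 32

/* Stand-ins for the library calls; return types are assumed, not documented. */
struct blk_io_ops {
	uint16_t (*dequeue_burst)(uint16_t devid, uint16_t qid, void **vbufs,
				  uint16_t nb_bufs);
	void (*handle_request)(void *vbuf); /* hypothetical application handler */
	void (*process_compl)(uint16_t devid, uint16_t qid, void **vbufs,
			      uint16_t nb_compl);
};

/* One polling iteration: dequeue a burst, then process and complete each request. */
uint16_t worker_poll_once(const struct blk_io_ops *ops, uint16_t devid,
			  uint16_t qid)
{
	void *vbufs[BURST_SZ];
	uint16_t nb, i;

	nb = ops->dequeue_burst(devid, qid, vbufs, BURST_SZ);
	for (i = 0; i < nb; i++) {
		ops->handle_request(vbufs[i]);
		/* Completion is marked one request at a time (nb_compl = 1). */
		ops->process_compl(devid, qid, &vbufs[i], 1);
	}
	return nb;
}

/* Fakes used only to dry-run the loop without hardware. */
int fake_pending = 3; /* requests left in the fake queue */
int fake_handled;
int fake_completed;
static int fake_storage[BURST_SZ];

uint16_t fake_dequeue_burst(uint16_t devid, uint16_t qid, void **vbufs,
			    uint16_t nb_bufs)
{
	uint16_t n = 0;

	(void)devid;
	(void)qid;
	while (fake_pending > 0 && n < nb_bufs) {
		vbufs[n] = &fake_storage[n];
		n++;
		fake_pending--;
	}
	return n;
}

void fake_handle_request(void *vbuf)
{
	(void)vbuf;
	fake_handled++;
}

void fake_process_compl(uint16_t devid, uint16_t qid, void **vbufs,
			uint16_t nb_compl)
{
	(void)devid;
	(void)qid;
	(void)vbufs;
	fake_completed += nb_compl;
}
```

In a real worker, the three function pointers would be replaced by direct calls to dao_virtio_blk_dequeue_burst(), the application's request handler, and dao_virtio_blk_process_compl(), and `worker_poll_once()` would run in a loop over the queues assigned to that lcore.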

15.3.7. VirtIO Descriptors Management

The VirtIO block library provides an API for managing VirtIO descriptors. It performs the following operations:

Determine the number of descriptors available by polling on virt queue notification address.

Issue DMA using DPDK DMA library to copy the descriptors to shadow queues.

Pre-allocate buffers for actual block IO request data. Worker cores check the shadow queue for the available descriptors and issue DMA for the data using these buffers.

Fetch all DMA completions.

Mark consumed VirtIO descriptors as used in host descriptor memory.

The dao_virtio_blk_desc_manage() API manages the VirtIO descriptors. The application is expected to call it from a service core as frequently as possible so that descriptors are shadowed between host and OCTEON memory.

dao_virtio_blk_desc_manage(uint16_t dev_id, uint16_t qp_count);

The parameter qp_count specifies the active virtio queue count. Below is the sample code to get qp_count:

virt_q_count = dao_virtio_blkdev_queue_count(virtio_devid);
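
A service core iteration that combines the two calls above could be structured as in the following sketch. As before, the library functions are reached through function pointers so that the example builds on its own. The return types, the `struct svc_ops` wrapper, and the `fake_*` functions are assumptions, and re-reading the queue count on every iteration is a design choice of this sketch rather than a documented requirement.

```c
#include <stdint.h>

/* Stand-ins for the two library calls; return types are assumed. */
struct svc_ops {
	uint16_t (*queue_count)(uint16_t devid);
	void (*desc_manage)(uint16_t devid, uint16_t qp_count);
};

/* One service iteration: refresh the queue count and shadow descriptors per device. */
void service_poll_once(const struct svc_ops *ops, const uint16_t *devids,
		       uint16_t nb_devs)
{
	uint16_t i;

	for (i = 0; i < nb_devs; i++) {
		uint16_t qp_count = ops->queue_count(devids[i]);

		ops->desc_manage(devids[i], qp_count);
	}
}

/* Fakes used only to dry-run the loop without hardware. */
uint32_t fake_manage_calls;
uint32_t fake_queues_seen;

uint16_t fake_queue_count(uint16_t devid)
{
	return (uint16_t)(devid + 1); /* device N pretends to have N + 1 queues */
}

void fake_desc_manage(uint16_t devid, uint16_t qp_count)
{
	(void)devid;
	fake_manage_calls++;
	fake_queues_seen += qp_count;
}
```

On a real service core, `service_poll_once()` would be invoked in a tight loop, with the function pointers replaced by dao_virtio_blkdev_queue_count() and dao_virtio_blk_desc_manage().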